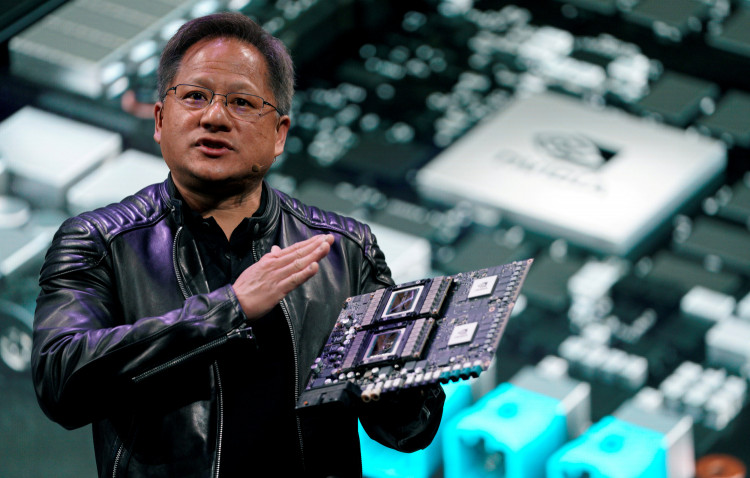

Nvidia has once again captured the industry's attention with its announcement of the Blackwell series of AI chips. According to Nvidia CEO Jensen Huang, each chip in this new generation is expected to cost between $30,000 and $40,000. That price reflects the scale of investment now driving AI hardware.

Blackwell succeeds the company's H100, or "Hopper" generation, chips. Those predecessors were introduced in 2022 and already carried a substantial price increase over earlier models. The rising prices reflect the growing cost of developing leading-edge AI hardware: Huang has said Nvidia spent around $10 billion on research and development for the new platform.

As Nvidia gears up to launch Blackwell, the tech community is abuzz with speculation about the chip's potential to revolutionize the training and deployment of AI software, including sophisticated models like ChatGPT. The promise of enhanced speed and energy efficiency with Blackwell is particularly enticing for top AI companies and developers, who have relied heavily on Nvidia's H100 GPUs over the past year. Giants in the industry, such as Meta, have already announced plans to acquire hundreds of thousands of H100 GPUs, indicating the critical role Nvidia's technology plays in powering the AI revolution.

Nvidia plans several versions of the Blackwell AI accelerator: the B100, the B200, and the GB200, which pairs two Blackwell GPUs with an Arm-based CPU. The models differ in their memory configurations and are slated for release later this year.

Nvidia unveiled the Blackwell series at its annual developer conference. At the same event, it also introduced a quantum computing service and a suite of tools for building general-purpose humanoid robots. These announcements extend Nvidia's portfolio beyond AI accelerators into quantum computing and robotics.

Within the Blackwell lineup, the GB200 "superchip" stands out because it combines two B200 chips with Nvidia's Grace CPU on a single board. According to Nvidia, placing the chips together reduces inter-chip communication delays and leaves more processing capacity available for AI workloads. The company claims the GB200 can deliver "30x the performance" for AI server farms, compared with H100-based systems. It also says the chip can cut energy consumption by up to 25 times. These figures are vendor claims, but they show that Nvidia is pitching Blackwell on efficiency as well as raw speed, in response to the industry's growing energy concerns.

For customers that need even more computing power, Nvidia also offers larger systems. The GB200 NVL72 is a rack-scale system built around 72 Blackwell GPUs. The DGX Superpod integrates eight racks of B200 chips into a single, container-sized AI data center. Nvidia has not disclosed pricing for either system, but their introduction shows the company is targeting the largest AI projects.